Deploy a Highly Available Kubernetes Cluster on Rocky Linux 10 using kubeadm and Cilium

1 Introduction

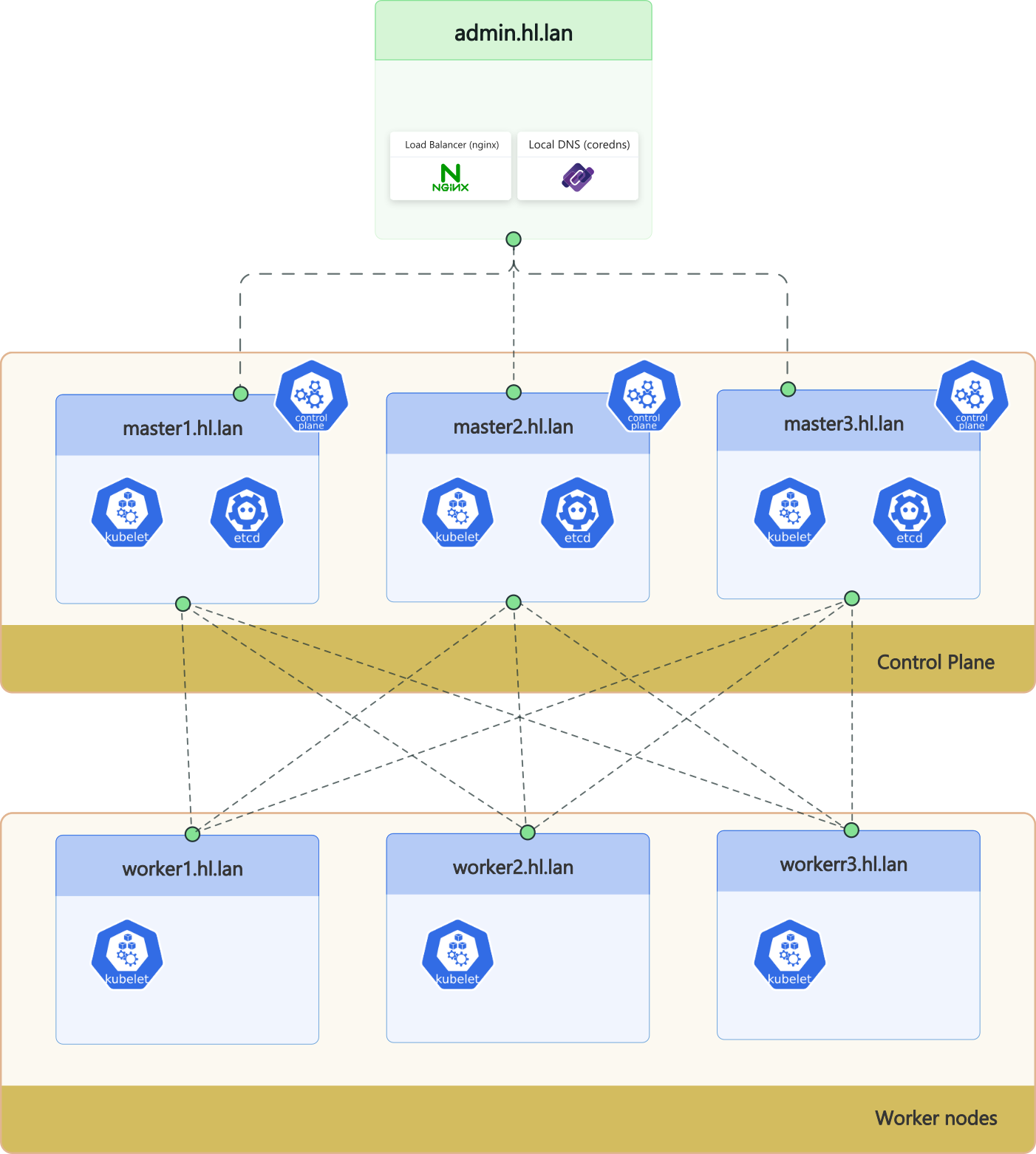

In this post, I describe how to deploy a highly available Kubernetes cluster on Rocky Linux 10 using kubeadm, with Cilium as the CNI.

2 Topology

2.1 Node inventory (single source of truth)

| Role | Hostname | RAM (GB) | CPU Cores | IP | Notes |
|---|---|---|---|---|---|
| admin | admin.hl.lan | 1 | 1 | 10.10.10.10 | nginx LB + CoreDNS |
| master | master1.hl.lan | 2 | 2 | 10.10.10.11 | control-plane + etcd |
| master | master2.hl.lan | 2 | 2 | 10.10.10.12 | control-plane + etcd |
| master | master3.hl.lan | 2 | 2 | 10.10.10.13 | control-plane + etcd |
| worker | worker1.hl.lan | 4 | 2 | 10.10.10.21 | kubelet + containerd + cilium |
| worker | worker2.hl.lan | 4 | 2 | 10.10.10.22 | kubelet + containerd + cilium |
| worker | worker3.hl.lan | 4 | 2 | 10.10.10.23 | kubelet + containerd + cilium |

2.2 DNS conventions

- lb.hl.lan points to the admin node (nginx) and is used as the control-plane endpoint.

- node hostnames use: master{1..3}.hl.lan and worker{1..3}.hl.lan

3 Configure admin node

Optional: Using /etc/hosts Instead of DNS

Before deploying the cluster, all nodes must be able to resolve each other by name.

The recommended approach is to deploy a local DNS server (as I will explain below).

However, if you want to skip this step, you can use /etc/hosts instead.

Be aware that this approach does not scale: it requires manual updates on every node and becomes error-prone as the cluster grows.

If you decide to go this route, the following entries must be present on every node.

10.10.10.10 admin.hl.lan lb.hl.lan
10.10.10.11 master1.hl.lan
10.10.10.12 master2.hl.lan
10.10.10.13 master3.hl.lan
10.10.10.21 worker1.hl.lan
10.10.10.22 worker2.hl.lan
10.10.10.23 worker3.hl.lan
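Since the table in section 2.1 is meant to be the single source of truth, these entries could also be generated instead of typed by hand on every node. Here is a minimal sketch of that idea. The `NODES` array and the `gen_hosts` function are names I made up for illustration, and the function only prints the lines so you can review them first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts lines from the node inventory.
# NODES and gen_hosts are illustrative names, not part of any tool.
NODES=(
  "10.10.10.10 admin.hl.lan lb.hl.lan"
  "10.10.10.11 master1.hl.lan"
  "10.10.10.12 master2.hl.lan"
  "10.10.10.13 master3.hl.lan"
  "10.10.10.21 worker1.hl.lan"
  "10.10.10.22 worker2.hl.lan"
  "10.10.10.23 worker3.hl.lan"
)

gen_hosts() {
  local entry
  # One inventory entry per output line, unchanged.
  for entry in "${NODES[@]}"; do
    printf '%s\n' "$entry"
  done
}
```

Once the output looks right, something like `gen_hosts | sudo tee -a /etc/hosts` would append it on a node.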

3.1 Configure DNS on the Admin Node (CoreDNS)

Before deploying Kubernetes, we need a reliable internal DNS service. In this setup, the admin node will act as the DNS server for the homelab.

If you are looking for a highly available DNS solution, you can use BIND with failover. I previously covered this approach here:

In this environment, we will deploy CoreDNS as a single-instance DNS server. High availability is intentionally out of scope.

CoreDNS can be deployed in multiple ways:

- As a systemd service

- Or as a container

In our case, we will deploy CoreDNS using Docker Compose.

Docker Compose Definition

The following Compose file runs CoreDNS, exposes DNS on port 53 (TCP/UDP), and mounts both:

- The CoreDNS Corefile

- The BIND-compatible zone file for the homelab

services:
  coredns:
    image: coredns/coredns:latest
    container_name: coredns
    restart: always
    ports:
      - "53:53/tcp"
      - "53:53/udp"
    volumes:
      - ./config/Corefile:/etc/coredns/Corefile:ro
      - ./config/zones/db.hl.lan.zone:/etc/coredns/zones/db.hl.lan.zone:ro
    command: "-conf /etc/coredns/Corefile -dns.port 53"

networks:
  default:
    external: true
    name: admin-network

CoreDNS Configuration (Corefile)

CoreDNS uses a single configuration file called Corefile, where you define your zones. We define two zones in it:

- The root zone (.)
  - Forwards all external DNS queries
  - Uses public resolvers (Cloudflare, Google, etc.)

- The homelab zone (hl.lan)
  - Is served locally
  - Uses a BIND-compatible zone file

.:53 {
    forward . 1.1.1.1 8.8.8.8 8.8.4.4
    log
    errors
}

hl.lan:53 {
    file /etc/coredns/zones/db.hl.lan.zone
    reload
    log
    errors
}

Homelab Zone File (BIND-Compatible)

Below is the zone file defining the internal DNS records for hl.lan. CoreDNS natively understands BIND-style zone files, making migration and maintenance straightforward.

; vim: ft=bindzone
; ============================================================================
; Zone File for hl.lan
; ============================================================================
; Author       : Zakaria Kebairia
; Organization : Homelab Technologies
; Purpose      : Internal DNS zone served via CoreDNS
; ============================================================================
$ORIGIN hl.lan.
$TTL 3600

; ----------------------------------------------------------------------------
; SOA & NS
; ----------------------------------------------------------------------------
@       IN  SOA   dns.hl.lan. infra.hl.com. (
            2025102801 ; Serial (YYYYMMDDNN)
            7200       ; Refresh
            3600       ; Retry
            1209600    ; Expire
            3600       ; Minimum
)
@       IN  NS    dns.hl.lan.

; ----------------------------------------------------------------------------
; Infrastructure Nodes
; ----------------------------------------------------------------------------
dns     IN  A     10.10.10.10
master1 IN  A     10.10.10.11
master2 IN  A     10.10.10.12
master3 IN  A     10.10.10.13
worker1 IN  A     10.10.10.21
worker2 IN  A     10.10.10.22
worker3 IN  A     10.10.10.23

; ----------------------------------------------------------------------------
; Aliases
; ----------------------------------------------------------------------------
lb      IN  CNAME dns.hl.lan.
admin   IN  CNAME dns.hl.lan.

; ----------------------------------------------------------------------------
; Notes
; ----------------------------------------------------------------------------
; - Always bump the serial after changes.
; - SOA email infra.hl.com. maps to infra@hl.com
; - Prefer A records for nodes, CNAMEs for logical services.
; ----------------------------------------------------------------------------
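The zone file asks you to always bump the serial after changes, and that is easy to get wrong by hand. Below is a small sketch of how the next YYYYMMDDNN serial could be computed. The `next_serial` function is a hypothetical helper of mine, not something CoreDNS ships, and it assumes the current serial already follows the YYYYMMDDNN convention.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute the next SOA serial in YYYYMMDDNN form.
# Usage: next_serial <current-serial> [today-as-YYYYMMDD]
next_serial() {
  local current="$1"
  local today="${2:-$(date +%Y%m%d)}"
  local day="${current:0:8}"   # date part of the current serial
  local nn="${current:8:2}"    # two-digit revision of the current serial
  local next

  # A later day starts again at revision 01.
  if [ "$today" -gt "$day" ]; then
    printf '%s01\n' "$today"
    return 0
  fi

  # Same day (or a serial dated in the future): increment the revision.
  # The 10# prefix stops values like "08" from being parsed as octal.
  next=$((10#$nn + 1))
  if [ "$next" -gt 99 ]; then
    echo "revision overflow for $day, pick a new serial manually" >&2
    return 1
  fi
  printf '%s%02d\n' "$day" "$next"
}
```

For example, `next_serial 2025102801` prints the serial to paste into the SOA record. The serial must only ever increase, which is why a serial dated in the future keeps its date and just gets a higher revision.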

Deploy CoreDNS

Because the Compose file references an external named network, you need to create it first with docker network create admin-network.

Once all configuration files are in place, start the service in detached mode:

docker compose up -d

At this point, CoreDNS is running and listening on port 53 on the admin node. You can check both the TCP and UDP listeners with the following command:

ss -tulpen | grep ':53 '

Configure the Admin Node to Use CoreDNS

Finally, configure the admin node to use itself as the DNS resolver. We also need to disable DHCP-provided DNS to avoid overrides.

sudo nmcli connection modify eth0 \
  ipv4.dns "127.0.0.1" \
  ipv4.ignore-auto-dns yes \
  ipv4.dns-search "hl.lan"

# Apply changes
sudo nmcli connection down eth0
sudo nmcli connection up eth0

Configure the Master and Worker Nodes to Use CoreDNS

All other nodes must also use the admin node as their DNS resolver.

sudo nmcli connection modify eth0 \
  ipv4.dns "10.10.10.10" \
  ipv4.ignore-auto-dns yes \
  ipv4.dns-search "hl.lan"

# Apply changes
sudo nmcli connection down eth0
sudo nmcli connection up eth0

Ensure lb.hl.lan resolves from all nodes before running kubeadm init or any join command.[1]
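To make that check repeatable, a small pre-flight function can loop over the names and report which ones fail. This is only a sketch: `check_names` and the `RESOLVE_CMD` variable are names I introduced here, and the default of `getent hosts` assumes the node resolves through the system resolver configured above.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: confirm every name resolves before kubeadm runs.
# RESOLVE_CMD is an assumption of this sketch; it defaults to "getent hosts".
RESOLVE_CMD="${RESOLVE_CMD:-getent hosts}"

check_names() {
  local name failed=0
  for name in "$@"; do
    # RESOLVE_CMD is intentionally unquoted so "getent hosts" splits into two words.
    if $RESOLVE_CMD "$name" >/dev/null 2>&1; then
      echo "ok:   $name"
    else
      echo "FAIL: $name"
      failed=1
    fi
  done
  # Non-zero exit status if at least one name did not resolve.
  return "$failed"
}
```

On any node, `check_names lb.hl.lan master{1..3}.hl.lan worker{1..3}.hl.lan` would then cover the whole inventory in one go.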

Firewall configuration

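DNS queries use UDP by default and fall back to TCP for large responses, so open both:

sudo firewall-cmd --add-port=53/tcp --permanent
sudo firewall-cmd --add-port=53/udp --permanent
sudo firewall-cmd --reload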

3.2 Configure NGINX as a Load Balancer for the Kubernetes API

Because we are deploying a highly available Kubernetes cluster with multiple control-plane nodes, we need a load balancer in front of the Kubernetes API.

Any reverse proxy or load balancer can be used here. HAProxy is a common and solid choice, but for a change I will use NGINX :).

It is important to highlight that the Kubernetes API requires TCP load balancing. This means Layer 4 (L4) load balancing — not Layer 7 (HTTP). NGINX provides this capability through its stream module.

Install NGINX (with Stream Module)

On Rocky Linux, the stream module is provided as a separate package.

sudo dnf install -y nginx nginx-mod-stream

NGINX Stream Configuration (Kubernetes API)

First, back up the default configuration file and open a new one.

sudo mv -v /etc/nginx/nginx.conf /etc/nginx/nginx.conf.bk
sudo vim /etc/nginx/nginx.conf

I found that I need to load the ngx_stream_module using its absolute path.

Then use the following configuration:

load_module /usr/lib64/nginx/modules/ngx_stream_module.so;

worker_processes 1;

events {
    worker_connections 1024;
}

# -------------------------------------------------------------------
# TCP load balancing for Kubernetes API (no TLS termination here)
# -------------------------------------------------------------------
stream {
    upstream k8s_api {
        least_conn;
        server 10.10.10.11:6443;
        server 10.10.10.12:6443;
        server 10.10.10.13:6443;
    }

    server {
        listen 6443;
        proxy_pass k8s_api;
        proxy_connect_timeout 5s;
        proxy_timeout 10m;
    }
}

This configuration:

- Listens on port 6443 on the admin node

- Distributes traffic across all control-plane nodes

- Uses a simple least connections load-balancing strategy

Run NGINX as a Systemd Service

For this setup, NGINX is deployed as a systemd service. This avoids unnecessary Docker networking complexity and keeps the load balancer tightly coupled to the host network.

Before starting NGINX, always validate the configuration:

sudo nginx -t

Then enable and start the service:

sudo systemctl enable --now nginx
sudo systemctl is-active nginx

active

Firewall configuration

sudo firewall-cmd --add-port=6443/tcp --permanent
sudo firewall-cmd --reload

4 Kubernetes Installation

4.1 Configuration for all nodes (masters and workers)

Disable swap:

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

Verify swap is disabled:

swapon --show

# (no output)

SELinux permissive (bootstrap-friendly):

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

getenforce

Permissive

Kernel modules + sysctl:

tee /etc/modules-load.d/containerd.conf <<EOF
overlay
br_netfilter
EOF

modprobe overlay
modprobe br_netfilter

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF

sysctl --system

Verify that the modules are loaded:

lsmod | grep -E 'overlay|br_netfilter'

br_netfilter 32768 0
overlay 94208 0
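The lsmod check only covers the kernel modules. The three sysctl values can be verified in the same spirit by reading them back from /proc/sys. The sketch below is my own addition: `check_k8s_sysctls` and `SYSCTL_ROOT` are hypothetical names, and the variable exists only so the check can be pointed at another directory.

```shell
#!/usr/bin/env bash
# Hypothetical check: confirm the sysctl values from k8s.conf are active.
# SYSCTL_ROOT is an assumption of this sketch; it defaults to /proc/sys.
SYSCTL_ROOT="${SYSCTL_ROOT:-/proc/sys}"

check_k8s_sysctls() {
  local key path value failed=0
  for key in \
    net.bridge.bridge-nf-call-iptables \
    net.bridge.bridge-nf-call-ip6tables \
    net.ipv4.ip_forward; do
    # net.ipv4.ip_forward lives at /proc/sys/net/ipv4/ip_forward, and so on.
    path="$SYSCTL_ROOT/${key//.//}"
    value="$(cat "$path" 2>/dev/null)" || value=""
    if [ "$value" = "1" ]; then
      echo "ok:   $key = 1"
    else
      echo "FAIL: $key = ${value:-<missing>}"
      failed=1
    fi
  done
  return "$failed"
}
```

A missing bridge entry usually means br_netfilter is not loaded, since those keys only appear once the module is present.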

Install container runtime (containerd)

dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

dnf install -y containerd.io

containerd config default | tee /etc/containerd/config.toml >/dev/null

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml

systemctl restart containerd

systemctl enable containerd

systemctl is-active containerd

active

We enable systemd cgroups for kubelet compatibility.[2]

Firewall configuration

- Master nodes firewall configuration

firewall-cmd --permanent \
  --add-port={6443,2379,2380,10250,10251,10252,10257,10259,179}/tcp
firewall-cmd --permanent --add-port=4789/udp
firewall-cmd --reload
firewall-cmd --list-ports

6443/tcp 2379-2380/tcp 10250-10259/tcp 179/tcp 4789/udp

These ports cover the API server, etcd, and the control-plane components.[3] Port 179/tcp is BGP and 4789/udp is the IANA-assigned VXLAN port. Cilium's default VXLAN tunnel uses 8472/udp and its node health checks use 4240/tcp, so open those on every node as well if you keep firewalld enabled with Cilium's default tunnel mode.

- Worker nodes firewall configuration

firewall-cmd --permanent \
  --add-port={179,10250,30000-32767}/tcp
firewall-cmd --permanent --add-port=4789/udp
firewall-cmd --reload
firewall-cmd --list-ports

10250/tcp 179/tcp 30000-32767/tcp 4789/udp

The NodePort range must be reachable from inside the cluster (and from clients if you expose NodePorts).[4]
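With two roles and two slightly different port lists, it can help to keep the lists in one place and generate the firewall-cmd calls from them. The sketch below does that with the exact ports from this section. `ports_for_role` and `firewall_commands` are hypothetical helper names, and the second function only prints the commands rather than running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: keep the per-role port lists from this section in one place.
ports_for_role() {
  case "$1" in
    master)
      echo "6443/tcp 2379/tcp 2380/tcp 10250/tcp 10251/tcp 10252/tcp 10257/tcp 10259/tcp 179/tcp 4789/udp"
      ;;
    worker)
      echo "179/tcp 10250/tcp 30000-32767/tcp 4789/udp"
      ;;
    *)
      echo "unknown role: $1" >&2
      return 1
      ;;
  esac
}

# Print the firewall-cmd invocations for a role instead of executing them,
# so the result can be reviewed before anything changes on the node.
firewall_commands() {
  local ports port
  ports="$(ports_for_role "$1")" || return 1
  for port in $ports; do
    echo "firewall-cmd --permanent --add-port=$port"
  done
  echo "firewall-cmd --reload"
}
```

Running `firewall_commands worker` shows what would be applied; once you are happy with it, the output can be piped to `sudo bash`.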

Install Kubernetes tools (kubeadm/kubelet/kubectl)

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/v1.35/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/v1.35/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF

dnf install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable --now kubelet

systemctl is-active kubelet

active

Deploy the first master (bootstrap)

Run this on master1.hl.lan

kubeadm init \
  --kubernetes-version "1.35.0" \
  --pod-network-cidr "10.244.0.0/16" \
  --service-dns-domain "hl.lan" \
  --control-plane-endpoint "lb.hl.lan:6443" \
  --upload-certs

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, run:

  mkdir -p $HOME/.kube
  cp /etc/kubernetes/admin.conf $HOME/.kube/config

You can now join any number of control-plane nodes by running the following command...

The cluster exists, but networking is not ready until we install a CNI.[5]

Configure the kubectl config file (on master1):

mkdir -p $HOME/.kube
cp /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get nodes

NAME             STATUS     ROLES           AGE   VERSION
master1.hl.lan   NotReady   control-plane   1m    v1.35.0

NotReady is expected before the CNI is installed.

4.2 Install Cilium CNI

Cilium should be installed before joining other nodes.[5]

Install Helm (admin node or master1)

helm version

version.BuildInfo{Version:"v3.15.4"}

Add Cilium repository

helm repo add cilium https://helm.cilium.io/
helm repo update

Install Cilium (kube-proxy replacement)

helm install cilium cilium/cilium \
  --namespace kube-system \
  --version "1.18.5" \
  --set kubeProxyReplacement=true \
  --set k8sServiceHost="lb.hl.lan" \
  --set k8sServicePort="6443" \
  --set hubble.enabled=true \
  --set hubble.relay.enabled=true \
  --set hubble.ui.enabled=true

NAME: cilium
STATUS: deployed

Cilium uses eBPF for service routing and policy enforcement.[6]

Wait for Cilium to be ready

kubectl -n kube-system rollout status ds/cilium

daemon set "cilium" successfully rolled out

kubectl get nodes

NAME             STATUS   ROLES           AGE   VERSION
master1.hl.lan   Ready    control-plane   6m    v1.35.0

4.3 Join additional control plane nodes

On master1, generate the join command:

kubeadm token create --print-join-command

kubeadm join lb.hl.lan:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>

Then on master1, retrieve the certificate key (required for control-plane join):

kubeadm init phase upload-certs --upload-certs

[upload-certs] Using certificate key: <cert-key>

Now on master2 and master3, run:

kubeadm join lb.hl.lan:6443 \
  --token <token> \
  --discovery-token-ca-cert-hash sha256:<hash> \
  --control-plane \
  --certificate-key <cert-key>

This node has joined the cluster as a control-plane node

Each control-plane node runs an API server and joins the etcd quorum.[7]
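Because the control-plane join is just the worker join command plus two extra flags, the pieces from the previous two steps can be glued together instead of copied by hand. The following is a sketch under that assumption, and `control_plane_join` is a hypothetical helper name. Keep in mind that, by default, bootstrap tokens expire after 24 hours and the uploaded certificates after two hours, so generate both shortly before joining.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a worker join command into a control-plane join command.
# $1: the line printed by "kubeadm token create --print-join-command"
# $2: the certificate key printed by "kubeadm init phase upload-certs --upload-certs"
control_plane_join() {
  local base="$1" cert_key="$2"
  if [ -z "$base" ] || [ -z "$cert_key" ]; then
    echo "usage: control_plane_join <join-command> <cert-key>" >&2
    return 1
  fi
  # Trim trailing whitespace so the appended flags are separated by one space.
  base="${base%"${base##*[![:space:]]}"}"
  printf '%s --control-plane --certificate-key %s\n' "$base" "$cert_key"
}
```

On master1, the result of `control_plane_join "$(kubeadm token create --print-join-command)" "<cert-key>"` is the full command to run on master2 and master3.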

4.4 Join worker nodes

On each worker:

kubeadm join lb.hl.lan:6443 \
  --token <token> \
  --discovery-token-ca-cert-hash sha256:<hash>

This node has joined the cluster

Workers run workloads and do not participate in etcd.[8]

5 Post-installation checks

5.1 Verify nodes

kubectl get nodes -o wide

NAME             STATUS   ROLES           VERSION   OS-IMAGE
master1.hl.lan   Ready    control-plane   v1.35.0   Rocky Linux 10.1 (Red Quartz)
master2.hl.lan   Ready    control-plane   v1.35.0   Rocky Linux 10.1 (Red Quartz)
master3.hl.lan   Ready    control-plane   v1.35.0   Rocky Linux 10.1 (Red Quartz)
worker1.hl.lan   Ready    <none>          v1.35.0   Rocky Linux 10.1 (Red Quartz)
worker2.hl.lan   Ready    <none>          v1.35.0   Rocky Linux 10.1 (Red Quartz)
worker3.hl.lan   Ready    <none>          v1.35.0   Rocky Linux 10.1 (Red Quartz)
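Reading the STATUS column by eye works for six nodes, but the same check can be scripted. Here is a small sketch that prints only the nodes that are not Ready. `not_ready_nodes` is a name I made up, and it assumes the default column layout of `kubectl get nodes --no-headers`, where the node name is the first field and the status is the second.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list nodes whose STATUS column is not exactly "Ready".
# Reads the output of "kubectl get nodes --no-headers" on stdin.
not_ready_nodes() {
  # Field 1 is the node name, field 2 is the status.
  awk '$2 != "Ready" { print $1 }'
}
```

Used as `kubectl get nodes --no-headers | not_ready_nodes`, empty output means every node is Ready. A cordoned node reports a status such as Ready,SchedulingDisabled, so it is listed too, which is usually what you want in a post-install check.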

5.2 Verify core pods

kubectl get pods -n kube-system

cilium-...            Running
cilium-operator-...   Running
coredns-...           Running
hubble-relay-...      Running
hubble-ui-...         Running

5.3 Verify Cilium status

cilium status

Cilium: OK
Hubble: OK
Cluster health: OK

5.4 Quick functional test (DNS + connectivity)

kubectl run -it --rm --restart=Never dns-test --image=busybox:1.36 -- nslookup kubernetes.default

Name: kubernetes.default
Address: 10.96.0.1

This verifies that the in-cluster CoreDNS is working and that service routing is operational.

Footnotes:

[1] If nodes cannot resolve lb.hl.lan, joining will fail or kubelet will flap because API connectivity is not stable.

[2] Systemd cgroups prevent resource accounting mismatches between kubelet and containerd.

[3] Required for API server, etcd quorum communication, control-plane components, and (optionally) BGP.

[4] NodePort services are exposed through a fixed TCP range on worker nodes.

[5] Nodes become Ready only after the CNI is installed. Installing Cilium early prevents scheduling/networking race conditions.

[6] Cilium uses eBPF instead of iptables, improving performance, observability, and security primitives (policies, visibility).

[7] Control-plane nodes run API server, controller-manager, scheduler, and participate in the etcd quorum.

[8] Worker nodes run workloads (pods), kubelet, container runtime, and Cilium agent; they do not participate in etcd.